Scale Business Processes With Operational ETL

Automate Salesforce data integration, file data preparation, and B2B data sharing

No credit card required • Full 14-day trial

You will probably like us if your use case involves:

Salesforce IntegrationOur bi-directional Salesforce connector rocks!LEARN MORE

-

File Data PreparationAutomate file data ingestion, cleansing, and normalization.LEARN MORE

-

REST API IngestionWe’ve yet come across an API we can’t ingest from!LEARN MORE

-

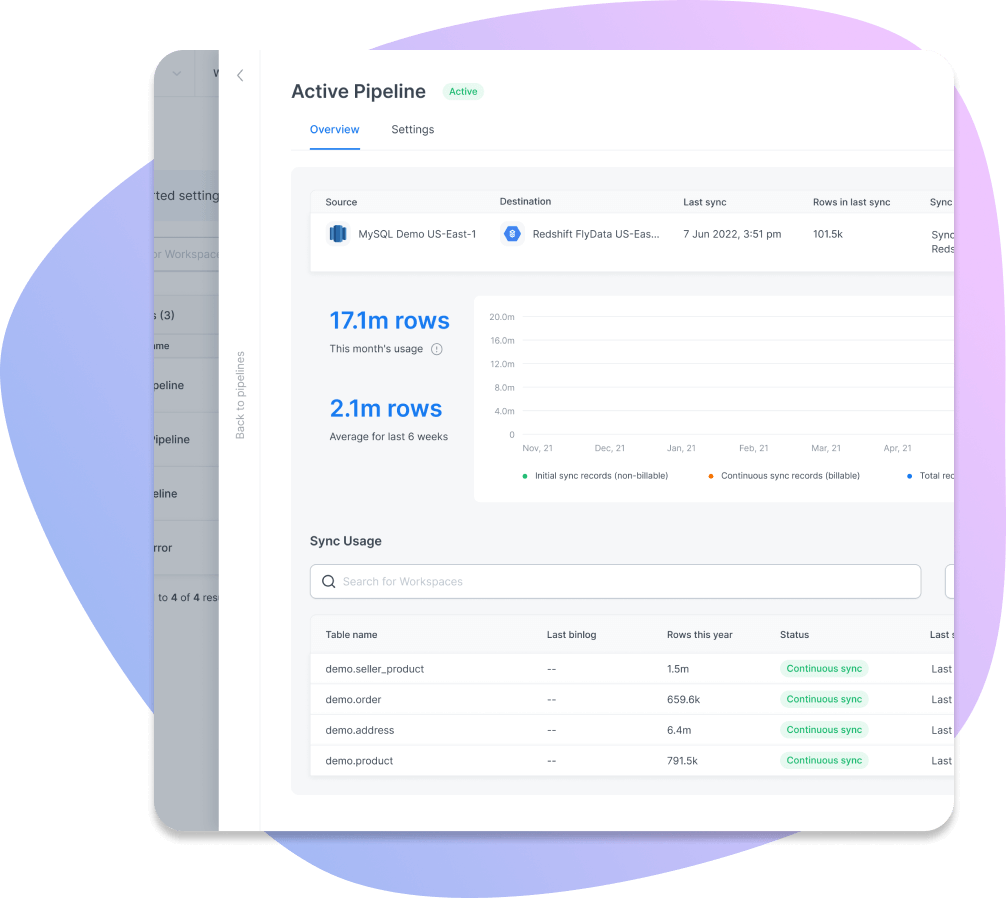

Database ReplicationPower data products with 60-second CDC replication.LEARN MORE

We may not be a good fit if you’re looking for:

- 100s of Native Connectors: We’re not in the connectors race, sorry. We do quality, not quantity.
- Code-heavy Solution: We exist for users who don’t like spending their days debugging scripts.
- Self-serve Solution: A Solution Engineer will quickly tell you if we’re a good fit. Then lean on us as much or as little as you like.
- Trigger-based Pipelines: We don’t do trigger- or event-based pipelines. We can schedule ETL pipelines to run every 5 minutes and CDC pipelines to run every 60 seconds.

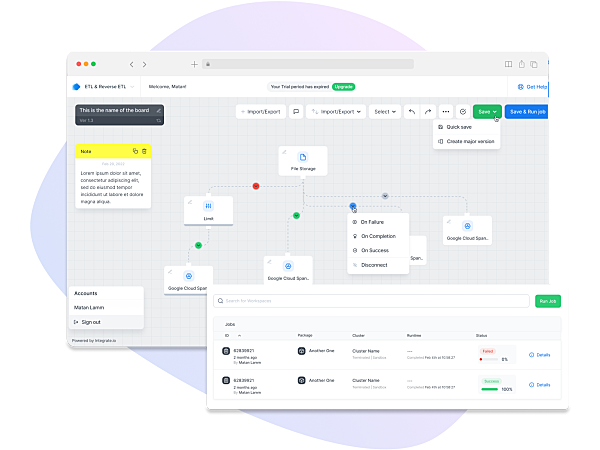

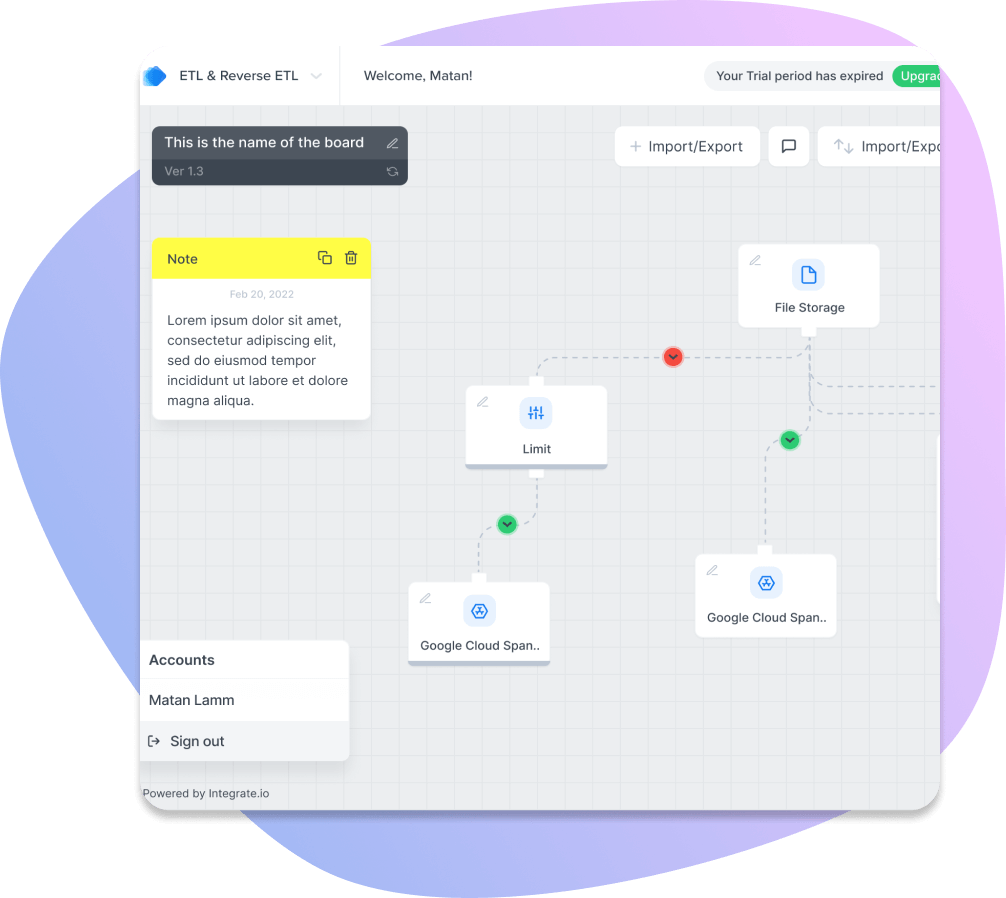

Pipelines In Minutes

Database Replication

- Automate Manual Processes: Streamline data preparation and sharing in minutes.
- Operationalize Your Data: Get the right data into your most important systems.
- Power Your Data Products: Create data-based internal- and external-facing applications.
- Future-Proof Your Data Journey: Scale to meet any challenge with a complete data platform.

Data Sources & Destinations

for the leading integrations.

Achieve Your Goals.

Truly Uncommon Customer Support

We ensure your team's success by partnering with you from day one to truly understand your needs & desired outcomes. Our only goal... is to help you overachieve on yours.

Best Customer Service Ever!

“They have been the best customer service team I have ever worked with from any outside vendor.”

- Matthew P., Analytics Manager

Multiply Your Data Team Outcomes

Maximize your data team's output with all of the simple, powerful tools & connectors you’ll ever need in one low-code platform. Empower data teams of any size to deliver on time & under budget.

TRY IT FREE FOR 14 DAYS

Simplified Regulatory Compliance

The Unified Stack for Modern Data Teams

Connect with us about using our no-code pipeline platform for your entire data journey.